Logistic Regression

(last updated on 2011-02-17)

As usual, Wikipedia's article on logistic regression is a good starting point. This short article provides a practical and quick guide to the application of logistic regression.

Usage

Logistic regression models the relationship between a binary (i.e. dichotomous, e.g. alive or dead) parameter and other parameters that are assumed to be related to it. In other words, given the values of the predicting parameters, a logistic regression model predicts the probability of a certain event. For example, one can use a logistic regression model to predict the probability of a person's death within 5 years based on parameters such as age, gender, years of smoking, number of heart attacks, etc.
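
As a minimal sketch of this kind of prediction, the Python function below turns an intercept, a list of coefficients and the matching parameter values into a probability using the logistic function $p = 1/(1 + e^{-g})$ with $g = \beta_0 + \beta_1 x_1 + \cdots + \beta_n x_n$. The function name and all coefficient values are hypothetical, chosen only for illustration. They are not fitted to any real data.

```python
import math


def predict_probability(intercept, coefficients, values):
    """Probability of the event for one subject: p = 1 / (1 + exp(-g)),
    where g = intercept + sum(coefficient * value)."""
    if len(coefficients) != len(values):
        raise ValueError("need exactly one coefficient per predicting parameter")
    g = intercept + sum(b * x for b, x in zip(coefficients, values))
    # Two algebraically equal forms; choosing by sign avoids overflow in math.exp.
    if g >= 0:
        return 1.0 / (1.0 + math.exp(-g))
    z = math.exp(g)
    return z / (1.0 + z)


# Hypothetical model: age (years), male (1 = yes, 0 = no), years of smoking.
intercept = -7.0
coefficients = [0.06, 0.4, 0.03]
p = predict_probability(intercept, coefficients, [65, 1, 20])
print(f"Predicted probability of death within 5 years: {p:.3f}")
```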

Fundamentals

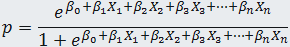

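Since most statistical software packages perform all the computations, users usually only need to understand the concepts and some of the fundamental mathematics behind logistic regression. The outcome of logistic regression is:

$$p = \frac{e^{\beta_0 + \beta_1 x_1 + \beta_2 x_2 + \cdots + \beta_n x_n}}{1 + e^{\beta_0 + \beta_1 x_1 + \beta_2 x_2 + \cdots + \beta_n x_n}}$$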

where $p$ is the probability that the event occurs, $x_i$ is a predicting parameter, $\beta_0$ is the "intercept", and $\beta_1$, $\beta_2$, $\beta_3$, and so on, are the regression coefficients. The predicting parameters can be metric (continuous), binary or ordinal. In the latter two cases, the parameter has to be coded as design (i.e. dummy) variables: a binary parameter becomes a single 0/1 variable, and a parameter with k categories typically needs k - 1 of them.
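
A minimal sketch of one common coding scheme, reference-cell coding, is shown below in Python. A parameter with k categories becomes k - 1 indicator variables, and the first listed category serves as the reference (all zeros). The function name and the smoking-status levels are hypothetical. Statistical packages also offer other schemes, and an ordinal parameter is sometimes entered as a single numeric score instead.

```python
def design_variables(value, categories):
    """Reference-cell coding: k categories -> k - 1 indicator (0/1) variables.
    The first category is the reference and is coded as all zeros."""
    if value not in categories:
        raise ValueError(f"unknown category: {value!r}")
    return [1 if value == c else 0 for c in categories[1:]]


# Hypothetical ordinal parameter with three levels.
levels = ["never", "former", "current"]
for status in levels:
    print(status, design_variables(status, levels))

# A binary parameter needs only one design variable.
print(design_variables("male", ["female", "male"]))
```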

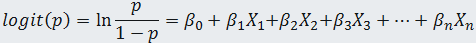

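The key element in logistic regression is the logit transformation, defined as follows:

$$g(x) = \ln\left(\frac{p}{1 - p}\right) = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \cdots + \beta_n x_n$$

where $g(x)$ is called the logit. Because the logit is linear in the regression coefficients, this transformation enables logistic regression to use many mathematical elements of linear regression.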

The logit transformation is also the link function for logistic regression: a link function turns the expected value of the dependent variable (here, $p$) into a linear function of the independent variables.
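
The Python sketch below shows the two directions of the link: `logit` maps a probability to the log-odds scale, and `inverse_logit` maps it back. With a hypothetical intercept and slope, raising $x$ by one unit always shifts the logit by exactly the slope, while the change in probability depends on the starting point. This is the linearity that the logit transformation provides. The function names and coefficient values are illustrative assumptions.

```python
import math


def logit(p):
    """Log-odds ln(p / (1 - p)), defined for 0 < p < 1."""
    if not 0.0 < p < 1.0:
        raise ValueError("p must lie strictly between 0 and 1")
    return math.log(p / (1.0 - p))


def inverse_logit(g):
    """Map a logit value back to a probability (the logistic function)."""
    if g >= 0:
        return 1.0 / (1.0 + math.exp(-g))
    z = math.exp(g)
    return z / (1.0 + z)


b0, b1 = -3.0, 0.5  # hypothetical intercept and slope
for x in (0, 4, 8):
    p_now = inverse_logit(b0 + b1 * x)
    p_next = inverse_logit(b0 + b1 * (x + 1))
    # The logit rises by b1 at every x; the probability gain does not stay constant.
    print(f"x={x}: logit change={logit(p_next) - logit(p_now):.4f}, "
          f"probability change={p_next - p_now:.4f}")
```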

Model Building Steps

- Use univariate analysis (a t-test, or a logistic regression with a single predicting parameter) to select candidate variables.

- If necessary, assess whether continuous variables are linear in the logit.

- If necessary, assess the interactions among the selected variables.

- Build the multivariable model.
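
For the first step, a minimal Python sketch of univariate screening with a t-test could look like the following. It compares the mean of each continuous candidate parameter between subjects with and without the event (Welch's t statistic) and keeps parameters whose p-value falls below a lenient cutoff. Several things here are assumptions: the function names, the 0.25 cutoff, the data, and the p-value itself, which uses a normal approximation via `math.erfc` rather than the t distribution (the standard library has none). The approximation is therefore only rough for small groups such as these.

```python
import math
import statistics


def welch_t(values, outcomes):
    """Welch t statistic comparing a continuous parameter between subjects
    with the event (outcome 1) and without it (outcome 0)."""
    with_event = [v for v, y in zip(values, outcomes) if y == 1]
    without_event = [v for v, y in zip(values, outcomes) if y == 0]
    standard_error = math.sqrt(
        statistics.variance(with_event) / len(with_event)
        + statistics.variance(without_event) / len(without_event)
    )
    return (statistics.mean(with_event) - statistics.mean(without_event)) / standard_error


def approx_two_sided_p(t):
    """Two-sided p-value from the standard normal, approximating the t distribution."""
    return math.erfc(abs(t) / math.sqrt(2.0))


def screen(candidates, outcomes, cutoff=0.25):
    """Names of candidate parameters whose approximate p-value is below cutoff."""
    return [name for name, values in candidates.items()
            if approx_two_sided_p(welch_t(values, outcomes)) < cutoff]


# Hypothetical data: 1 = event occurred, 0 = it did not.
outcomes = [0, 0, 0, 0, 1, 1, 1, 1]
candidates = {
    "age": [40, 45, 50, 52, 60, 66, 70, 72],
    "shoe_size": [42, 40, 44, 41, 43, 41, 42, 40],
}
print(screen(candidates, outcomes))
```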

One popular automated approach to selecting variables is stepwise logistic regression, which comes in backward (elimination) and forward (selection) variants.
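
A forward stepwise procedure can be sketched in plain Python as below. Starting from the intercept-only model, it repeatedly adds the candidate parameter whose inclusion gives the smallest likelihood-ratio (deviance-reduction) p-value, and it stops when no candidate beats an entry threshold. Each model is fitted by Newton-Raphson maximum likelihood. Everything here is an illustrative assumption: the function names, the 0.05 entry threshold, the one-degree-of-freedom test (valid when each step adds a single continuous or 0/1 parameter), and the data. Backward elimination works the other way round, starting from the full model and removing parameters. Real packages also add safeguards (e.g. for separation or non-convergence) that this sketch omits.

```python
import math


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [list(row) + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def fit_logistic(X, y, max_iter=50, tol=1e-10):
    """Newton-Raphson maximum-likelihood fit. Each row of X starts with 1.0
    for the intercept. Returns (coefficients, log-likelihood)."""
    k = len(X[0])
    beta = [0.0] * k
    for _ in range(max_iter):
        score = [0.0] * k                     # X'(y - p)
        info = [[0.0] * k for _ in range(k)]  # X'WX with W = p(1 - p)
        for row, yi in zip(X, y):
            p = 1.0 / (1.0 + math.exp(-sum(b * x for b, x in zip(beta, row))))
            for a in range(k):
                score[a] += (yi - p) * row[a]
                for c in range(k):
                    info[a][c] += p * (1.0 - p) * row[a] * row[c]
        step = solve(info, score)
        beta = [b + s for b, s in zip(beta, step)]
        if max(abs(s) for s in step) < tol:
            break
    loglik = 0.0
    for row, yi in zip(X, y):
        p = 1.0 / (1.0 + math.exp(-sum(b * x for b, x in zip(beta, row))))
        loglik += math.log(p) if yi == 1 else math.log(1.0 - p)
    return beta, loglik


def forward_stepwise(columns, y, enter=0.05):
    """Add parameters one at a time while the best likelihood-ratio p-value < enter."""
    def design(names):
        return [[1.0] + [float(columns[n][i]) for n in names] for i in range(len(y))]

    selected, remaining = [], list(columns)
    _, current_ll = fit_logistic(design(selected), y)
    while remaining:
        best = None
        for name in remaining:
            _, ll = fit_logistic(design(selected + [name]), y)
            g = max(2.0 * (ll - current_ll), 0.0)     # deviance reduction
            p_value = math.erfc(math.sqrt(g / 2.0))   # chi-square tail, 1 df
            if best is None or p_value < best[0]:
                best = (p_value, name, ll)
        if best[0] >= enter:
            break
        selected.append(best[1])
        remaining.remove(best[1])
        current_ll = best[2]
    return selected


# Hypothetical data: 20 subjects, outcome y and two candidate parameters.
y = [0, 0, 0, 0, 0, 1, 0, 0, 0, 1, 0, 1, 1, 0, 1, 1, 1, 1, 1, 1]
columns = {
    "x1": list(range(1, 21)),
    "x2": [3, 1, 4, 1, 5, 9, 2, 6, 5, 3, 5, 8, 9, 7, 9, 3, 2, 3, 8, 4],
}
print(forward_stepwise(columns, y))
```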

Assessing the Model

The essence of assessing a logistic model is the same as that of any other model assessment: comparing the predicted with the observed. Common methods include the following:

- Pearson chi-square statistic.

- Deviance.

- Hosmer-Lemeshow test, which is essentially a variant of the Pearson chi-square statistic computed over groups of subjects with similar predicted probabilities. It is much more practical because, in most cases, the assumptions behind the chi-square tests (e.g. m-asymptotics, which require many subjects per covariate pattern) are not met.
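
The three statistics in the list can be sketched in Python from observed 0/1 outcomes and a fitted model's predicted probabilities. Here the Pearson chi-square and the deviance are computed per subject, i.e. assuming every subject has its own covariate pattern, which is exactly the situation in which their chi-square p-values become unreliable. The Hosmer-Lemeshow statistic instead sorts subjects by predicted probability, splits them into groups (10 by default, the usual "deciles of risk") and compares observed with expected event counts in each group. Its p-value uses a chi-square distribution with (groups - 2) degrees of freedom. The function names and data are hypothetical, the chi-square tail helper only handles an even number of degrees of freedom, and tie handling in the grouping differs between statistical packages.

```python
import math


def pearson_chi2(y, p):
    """Sum of squared Pearson residuals, one term per subject."""
    return sum((yi - pi) ** 2 / (pi * (1.0 - pi)) for yi, pi in zip(y, p))


def deviance(y, p):
    """-2 times the log-likelihood of the predicted probabilities."""
    return -2.0 * sum(math.log(pi) if yi == 1 else math.log(1.0 - pi)
                      for yi, pi in zip(y, p))


def hosmer_lemeshow(y, p, groups=10):
    """Hosmer-Lemeshow statistic; needs at least `groups` subjects."""
    order = sorted(range(len(p)), key=lambda i: p[i])
    n = len(order)
    statistic = 0.0
    for g in range(groups):
        members = order[g * n // groups:(g + 1) * n // groups]
        observed = sum(y[i] for i in members)
        expected = sum(p[i] for i in members)
        mean_p = expected / len(members)
        statistic += (observed - expected) ** 2 / (len(members) * mean_p * (1.0 - mean_p))
    return statistic


def chi2_upper_tail_even_df(x, df):
    """P(X > x) for chi-square X with an even number of degrees of freedom:
    exp(-x/2) * sum over i < df/2 of (x/2)^i / i!."""
    if df <= 0 or df % 2:
        raise ValueError("this sketch only handles positive, even df")
    half, term, total = x / 2.0, 1.0, 1.0
    for i in range(1, df // 2):
        term *= half / i
        total += term
    return math.exp(-half) * total


# Hypothetical observed outcomes and predicted probabilities for 20 subjects.
y = [0, 0, 0, 1, 0, 0, 0, 1, 0, 1, 0, 1, 1, 0, 1, 1, 0, 1, 1, 1]
p = [(i + 1) / 21 for i in range(20)]
hl = hosmer_lemeshow(y, p)
print(f"Pearson chi-square = {pearson_chi2(y, p):.2f}")
print(f"Deviance = {deviance(y, p):.2f}")
print(f"Hosmer-Lemeshow = {hl:.2f}, p = {chi2_upper_tail_even_df(hl, 10 - 2):.3f}")
```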

Model Interpretation

The coefficients $\beta_0, \beta_1, \beta_2, \beta_3, \ldots, \beta_n$ are estimated by the regression, and each coefficient $\beta_i$ has its own significance level, the p-value $p_i$. As in other statistical analyses, $p_i$ shows whether $\beta_i$ differs significantly from the hypothesis $\beta_i = 0$. There are different ways to assess significance, and they yield somewhat different p-values, but all of them have the same objective and simply approach it differently. They include the deviance (likelihood-ratio) test, the Wald test and the score test.
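
Two of these tests are simple enough to sketch in Python once a model has been fitted. The Wald test divides a coefficient by its standard error and refers the resulting z to the standard normal distribution. The deviance (likelihood-ratio) test compares the log-likelihoods of the models with and without the coefficient, referring G = 2(LL_full - LL_reduced) to a chi-square distribution with 1 degree of freedom. The function names and the coefficient, standard error and log-likelihood values are hypothetical. The score test, which needs the score vector and information matrix of the reduced model, is not shown.

```python
import math


def wald_test(beta, standard_error):
    """Wald z = beta / SE and its two-sided p-value under the standard normal."""
    z = beta / standard_error
    return z, math.erfc(abs(z) / math.sqrt(2.0))


def likelihood_ratio_test(loglik_reduced, loglik_full):
    """G = 2 * (LL_full - LL_reduced) for dropping one coefficient; p-value from
    the chi-square distribution with 1 df, P(X > G) = erfc(sqrt(G / 2))."""
    g = max(2.0 * (loglik_full - loglik_reduced), 0.0)
    return g, math.erfc(math.sqrt(g / 2.0))


# Hypothetical fitted values for one coefficient beta_i.
z, p_wald = wald_test(0.8, 0.35)
g, p_lr = likelihood_ratio_test(-60.0, -57.1)
print(f"Wald: z = {z:.2f}, p = {p_wald:.4f}")
print(f"Likelihood ratio: G = {g:.2f}, p = {p_lr:.4f}")
```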

The sign of each estimated coefficient tells whether its predicting parameter increases (positive sign) or decreases (negative sign) the probability of the event.
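
One common way to make the sign concrete is the odds ratio $e^{\beta_i}$: the factor by which the odds $p/(1-p)$ of the event are multiplied when $x_i$ increases by one unit and the other parameters stay fixed. A positive $\beta_i$ gives an odds ratio above 1, and a negative one gives an odds ratio below 1. The Python sketch below checks this numerically. The intercept, coefficient values and parameter names are hypothetical.

```python
import math


def odds(p):
    """Odds p / (1 - p) of an event with probability p."""
    return p / (1.0 - p)


def probability(g):
    """Logistic function mapping a logit g to a probability."""
    return 1.0 / (1.0 + math.exp(-g))


b0 = -2.0  # hypothetical intercept
for name, beta in [("age_decades", 0.6), ("exercise_hours", -0.25)]:  # hypothetical
    x = 3.0  # arbitrary starting value of the parameter
    ratio = odds(probability(b0 + beta * (x + 1))) / odds(probability(b0 + beta * x))
    direction = "raises" if beta > 0 else "lowers"
    # The ratio of odds equals exp(beta), whatever starting value x is used.
    print(f"{name}: beta = {beta:+.2f}, odds ratio = {ratio:.4f} "
          f"(exp(beta) = {math.exp(beta):.4f}); it {direction} the probability of the event")
```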

This short article was originally written after extensive communication with Robert TV Kung, PhD, and Douglas McNair, MD, PhD, who were leading an effort to apply logistic regression to a sophisticated medical device clinical trial.